tl;dr

i created a genai video platform called OpenV in ~100 hours for CivitAI’s Project Odyssey (an ai film competition) by using ai:

- planning in ChatGPT o1

- frontend in v0

- everything else in Cursor agent

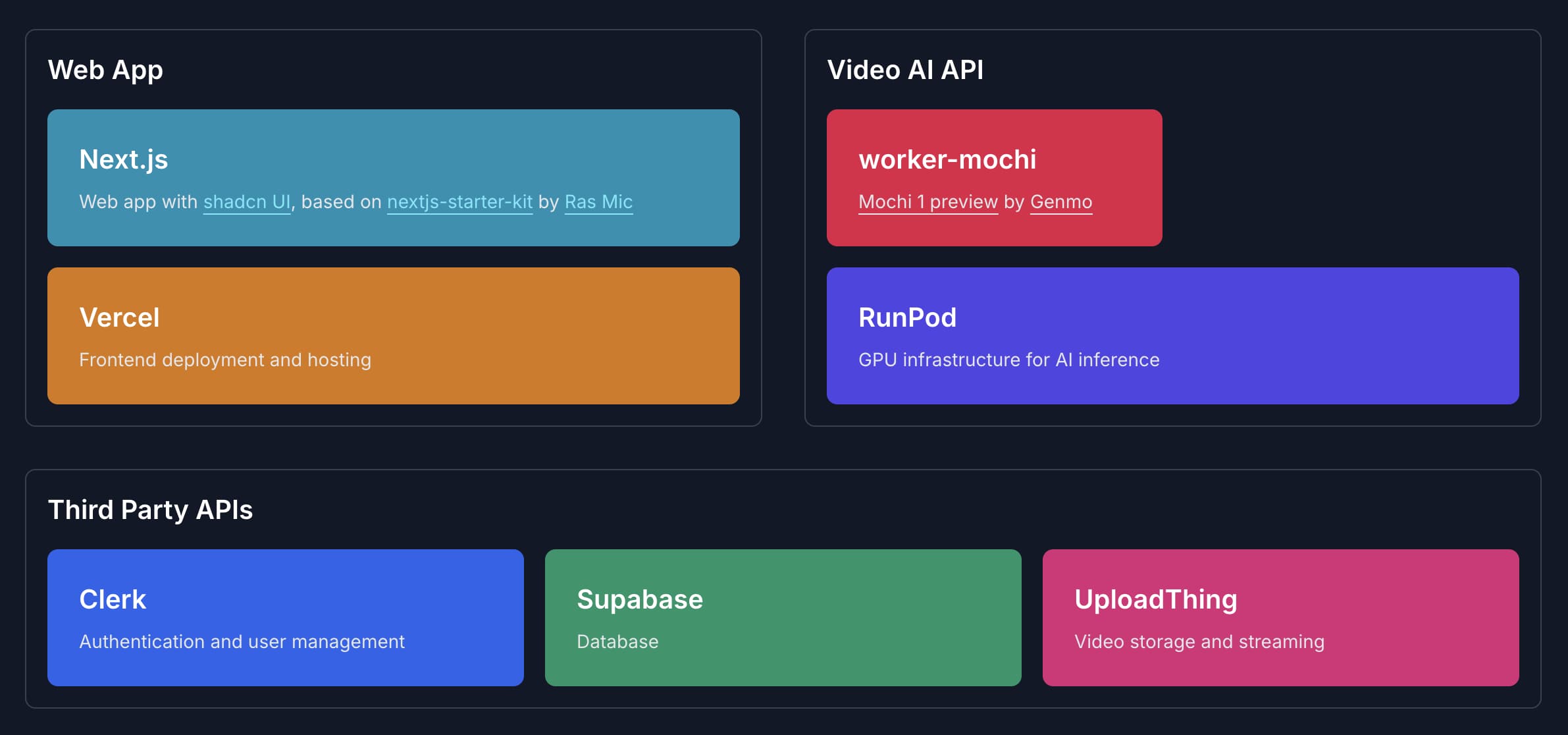

it’s built on top of the nextjs-starter-kit by Ras Mic (which comes with NextJS, Shadcn, Clerk, Supabase), deployed on Vercel & Runpod, using UploadThing as storage and Mochi as the model to generate videos.

OpenV consists of a web app and a model api, both open source under MIT.

the idea

when i saw rain1011/pyramid-flow-sd3 (an open source video generation model), the idea for a new side project was born: a platform called OpenV (open video) that can generate videos with open source ai models.

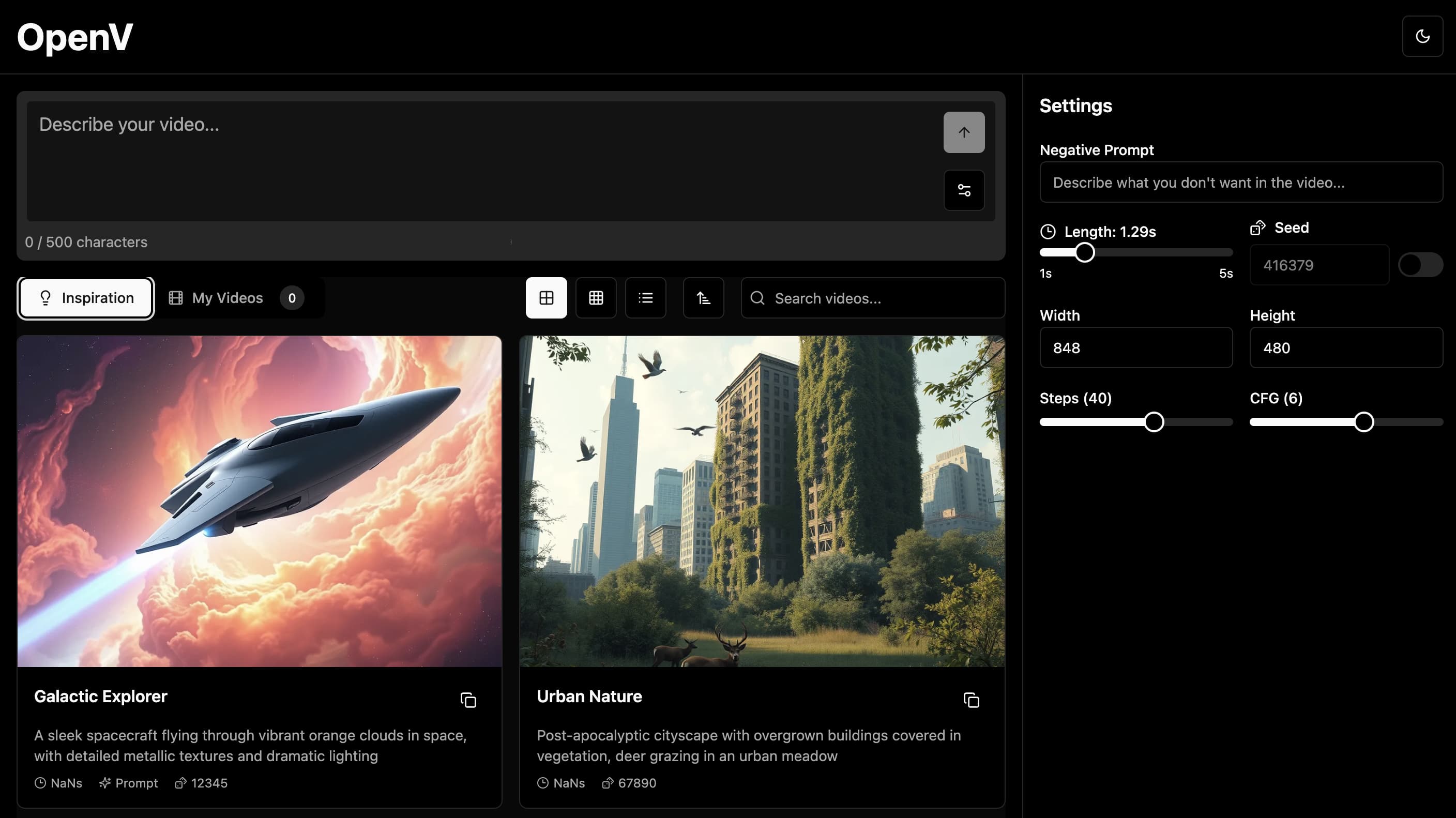

so i drafted a quick prototype using v0:

i want to build a website based on nextjs, shadcn, typescript. its name is “OpenV” and it’s a generative video web app using ai. we are building the homepage, which consists of:

- a large prompt area and a “generate” button and an element to change the length (5 or 10 seconds) and a checkbox to “enhance prompt”

- a tab area with “inspiration” and “my videos”

- inspiration showing in a grid videos from other users and how to guidelines

- my videos showing all videos that the user already has generated

- when the user clicks on generate, the video is added in some kind of queue and the queue is always visible

this prompt one-shotted this:

34 prompts later:

i then used pyramid-flow-sd3 via an api on Runpod, but the weekend was over before i connected the frontend to it.

project odyssey

CivitAI reached out to Runpod about becoming a gold sponsor for their ai film competition, Project Odyssey (dec 16, 2024 to jan 16, 2025), and we said yes right away.

one part of the sponsorship deal requires us to provide our own video gen tool that contestants of Project Odyssey can use for free during the competition to generate up to 10 minutes of video.

as Runpod is a provider of infrastructure for ai companies, we had no such tool in our portfolio. this is why we decided to turn my side project OpenV into a full-blown product. the goal is to showcase the power of Runpod and provide educational resources for developers. but instead of pyramid-flow-sd3, we decided to use Mochi as the video generation model, as it generates higher-quality videos.

category: sponsored tools

Project Odyssey has its own category for sponsored tools, which means that videos generated with OpenV can be submitted to the competition, and Runpod (probably me plus community votes) will be judging them. the winners will receive prizes, so make sure to check this out if you want to win.

model as api

almost every ai product i start working on requires a specific open source model running as an api. once that’s working, i continue with the rest of the project.

converting mochi-1 into an api was straightforward, as i could get a lot of inspiration from camenduru/mochi-1-preview-tost (a Runpod-compatible worker serving Mochi with ComfyUI in python).

this is what a request to the api looks like:

{
  "input": {
    "positive_prompt": "a cat playing with yarn",
    "negative_prompt": "",
    "width": 848,
    "height": 480,
    "seed": 42,
    "steps": 60,
    "cfg": 6,
    "num_frames": 31
  },
  "webhook": "https://openv.ai/api/runpod/webhook"
}
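to make the request above concrete, here is a minimal TypeScript sketch of how a web app could build this payload and submit it to a Runpod serverless endpoint. it assumes Runpod’s async /run route with bearer-token auth; the function names, the defaults (copied from the example request), and the endpointId / apiKey parameters are illustrative, not OpenV’s actual code.

```typescript
// Shape of the worker input, mirroring the example request above.
interface MochiInput {
  positive_prompt: string;
  negative_prompt: string;
  width: number;
  height: number;
  seed: number;
  steps: number;
  cfg: number;
  num_frames: number;
}

// Runpod's /run route replies right away with a job id and a status such as "IN_QUEUE".
interface RunpodRunResponse {
  id: string;
  status: string;
}

// Defaults copied from the example request (illustrative, not necessarily production values).
const DEFAULT_INPUT: Omit<MochiInput, "positive_prompt"> = {
  negative_prompt: "",
  width: 848,
  height: 480,
  seed: 42,
  steps: 60,
  cfg: 6,
  num_frames: 31,
};

// Hypothetical helper: merges overrides into the defaults and attaches the webhook url.
function buildMochiRequest(
  prompt: string,
  webhookUrl: string,
  overrides: Partial<Omit<MochiInput, "positive_prompt">> = {},
) {
  return {
    input: { ...DEFAULT_INPUT, ...overrides, positive_prompt: prompt },
    webhook: webhookUrl,
  };
}

// Submits an async job; the finished result is later POSTed to the webhook url.
async function submitJob(
  endpointId: string,
  apiKey: string,
  body: ReturnType<typeof buildMochiRequest>,
): Promise<RunpodRunResponse> {
  const res = await fetch(`https://api.runpod.ai/v2/${endpointId}/run`, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(body),
  });
  if (!res.ok) {
    throw new Error(`runpod returned ${res.status}`);
  }
  return (await res.json()) as RunpodRunResponse;
}
```

the /run call doesn’t wait for the video: it returns a job id immediately, and the result shows up later at the webhook url.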

video storage

once a video is generated, it must be stored somewhere so it can be served to the user. everything runs serverless, so anything the worker writes locally is gone once the request finishes.

i first tried Vercel’s blob storage, but the connection wasn’t stable (uploads failed many times, even with a retry strategy).

then i switched over to UploadThing, which was working great. (i just don’t think that the python implementation of the api is correct. would you please help me out here, theo?)
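for reference, a retry strategy like the one mentioned above usually means retrying the upload with exponential backoff. the worker itself is written in python, so this TypeScript helper only sketches the general pattern; withRetry, its parameters, and the delays are hypothetical.

```typescript
// Resolves after the given number of milliseconds.
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: runs `operation`, retrying up to `retries` extra times.
// Waits baseDelayMs, then 2x, 4x, ... between attempts (exponential backoff).
async function withRetry<T>(
  operation: () => Promise<T>,
  { retries = 3, baseDelayMs = 500 }: { retries?: number; baseDelayMs?: number } = {},
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation();
    } catch (err) {
      lastError = err;
      if (attempt < retries) {
        await sleep(baseDelayMs * 2 ** attempt);
      }
    }
  }
  // Every attempt failed: surface the last error to the caller.
  throw lastError;
}
```

backoff gives a flaky storage service time to recover, but as the Vercel experience shows, it can’t fix a connection that keeps dropping.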

response

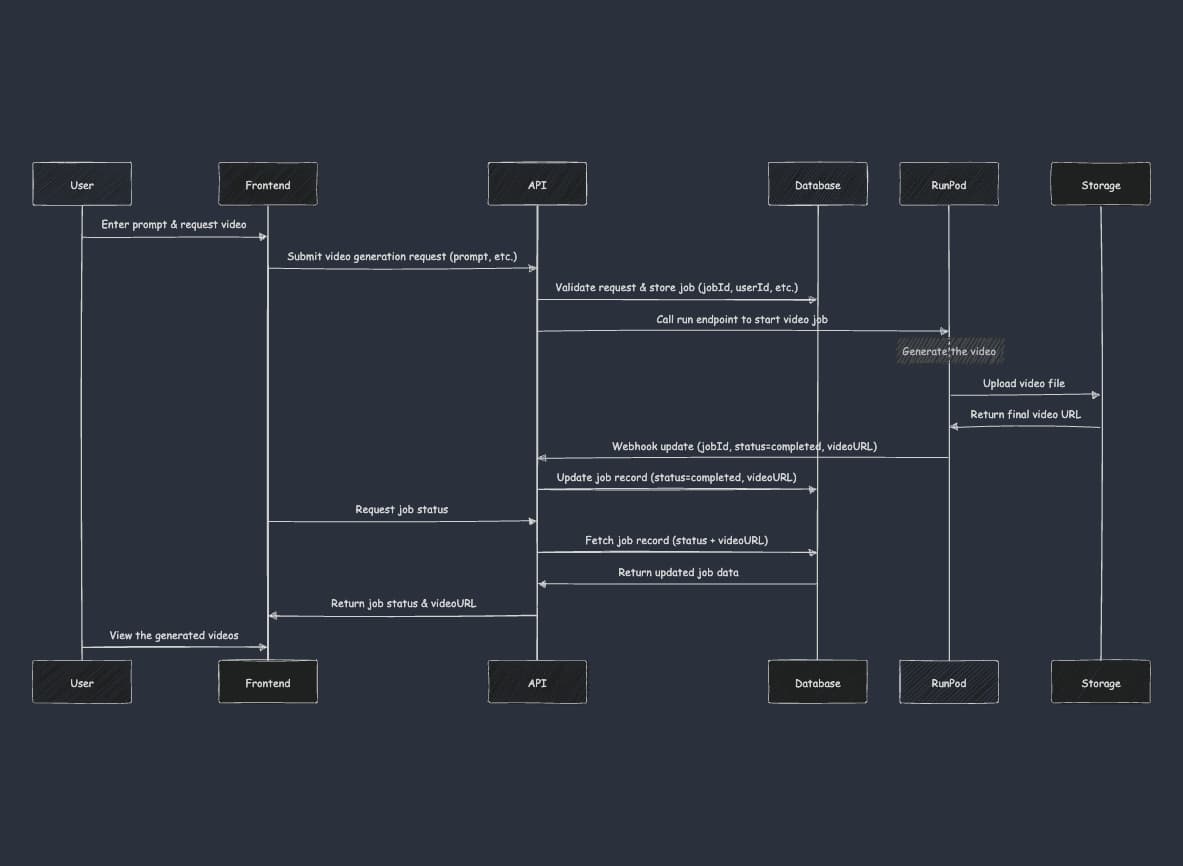

when the user wants to generate a video, OpenV sends a request to the api, which adds the request to a queue and returns a job id. OpenV also provides a webhook endpoint that the api calls once the video is generated (or when an error occurs). once the video is generated, Runpod sends this payload to the webhook endpoint:

{
  "id": "job-123",
  "status": "COMPLETED",
  "output": {
    "result": "https://utfs.io/f/a655aa95-1f60-4809-8528-25a875d80539-92yd7b.mp4"
  }
}
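a webhook endpoint shouldn’t trust its input blindly. the sketch below, assuming the payload shape shown above, validates the body and turns it into either a completed job with a video url or a failed one. parseRunpodWebhook is a hypothetical name, and treating every non-COMPLETED status as a failure (plus reading an optional error string) is an assumption.

```typescript
// Result of a finished job, as the web app would store it.
type WebhookResult =
  | { kind: "completed"; jobId: string; videoUrl: string }
  | { kind: "failed"; jobId: string; status: string; error?: string };

// Hypothetical parser: validates the untrusted webhook body before touching the database.
function parseRunpodWebhook(body: unknown): WebhookResult {
  if (typeof body !== "object" || body === null) {
    throw new Error("invalid webhook payload");
  }
  const { id, status, output, error } = body as {
    id?: unknown;
    status?: unknown;
    output?: { result?: unknown } | null;
    error?: unknown;
  };
  if (typeof id !== "string" || typeof status !== "string") {
    throw new Error("webhook payload without id or status");
  }
  if (status === "COMPLETED") {
    // The worker puts the url of the stored video into output.result.
    const videoUrl = output?.result;
    if (typeof videoUrl !== "string") {
      throw new Error(`job ${id} completed without a video url`);
    }
    return { kind: "completed", jobId: id, videoUrl };
  }
  // Assumption: any other status reported to the webhook is treated as a failure.
  return { kind: "failed", jobId: id, status, error: typeof error === "string" ? error : undefined };
}
```

in a route handler for /api/runpod/webhook, you would call this on the parsed request body and then update the matching job in the database.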

the web app

foundation

to get started quickly, i used nextjs-starter-kit from michael shimeles as the foundation. it comes packed with:

- NextJS + Shadcn/UI + TypeScript

- Clerk for authentication

- Supabase as database in combination with Prisma as orm

- Stripe for payments (but we’re not charging anything, so this isn’t needed)

it helps me a lot to have a visual representation of the project, so i created this system overview:

planning

i used ChatGPT o1 to create a high-level overview of the project and tried to describe what a typical workflow might look like. this resulted in a nice sequence diagram showing the most important user interaction: generating a video.

i then broke down the project into various user stories together with ChatGPT o1.

one example is the “monthly quota” feature: it limits each user to 10 minutes of generated video per month. it also takes into account the custom date range of the competition (dec 16, 2024 to jan 16, 2025):

user story

as a user, i want a monthly video generation limit that can also be enforced within custom date ranges, so i never exceed my allowed usage, and admins can easily adjust or reset these limits.

acceptance criteria

configurable window and limit

- the system reads a start date (LIMIT_START_DATE) and end date (LIMIT_END_DATE) from environment variables.

  - if these are set, the limit applies only within that date range.

  - if these are not set, the system defaults to a standard monthly cycle (usage resets on the 1st).

- the total allowed monthly usage (MONTHLY_LIMIT_SECONDS) is also set via environment variables.

usage tracking and enforcement

- each user has a monthlyUsage field (in seconds) that increments whenever they create a job.

- on each job creation (POST /api/runpod):

  - check if the current date is within the custom date range (LIMIT_START_DATE to LIMIT_END_DATE).

    - if yes, enforce the usage limit for that range.

    - if not, fall back to the standard monthly logic (usage resets on the 1st).

  - compare (current monthlyUsage + requestedVideoDuration) to MONTHLY_LIMIT_SECONDS.

    - if it exceeds the limit, return an error (403 or 409) with a clear message (“you have reached your monthly limit.”).

    - otherwise, allow the job and add the requested video duration to monthlyUsage.

monthly reset or end-of-period behavior

- when the date range ends (LIMIT_END_DATE is reached), the system reverts to the normal monthly cycle.

- if no date range is defined, the system uses a standard monthly reset strategy (resetting monthlyUsage to 0 on the 1st).

- document how and when these resets occur so admins can update the usage policy if needed.

documentation

- in docs/system-overview.md, describe how the start date, end date, and monthly usage limit are read from environment variables.

- provide examples:

  - a standard monthly cycle (no start/end date, 600 seconds limit).

  - a special date range (dec 16 – jan 16) with a higher or lower limit.
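the acceptance criteria above translate into fairly little code. this is a minimal sketch, not OpenV’s implementation, assuming the env var names from the user story, a 600-second fallback limit, an exclusive LIMIT_END_DATE, and a hypothetical usageWindowStart field stored next to monthlyUsage, so usage resets lazily when a new window begins (instead of a scheduled job on the 1st). it answers denials with 403, one of the two status codes the story allows.

```typescript
// Quota settings; field comments name the env vars from the user story.
interface QuotaConfig {
  startDate?: Date; // LIMIT_START_DATE
  endDate?: Date; // LIMIT_END_DATE (treated as exclusive here, an assumption)
  limitSeconds: number; // MONTHLY_LIMIT_SECONDS
}

interface QuotaWindow {
  start: Date;
  end: Date;
}

type QuotaDecision =
  | { allowed: true; newUsage: number; windowStart: Date }
  | { allowed: false; status: 403; message: string };

// Reads the settings; the 600-second fallback is an assumption.
function readQuotaConfig(env: Record<string, string | undefined>): QuotaConfig {
  return {
    startDate: env.LIMIT_START_DATE ? new Date(env.LIMIT_START_DATE) : undefined,
    endDate: env.LIMIT_END_DATE ? new Date(env.LIMIT_END_DATE) : undefined,
    limitSeconds: Number(env.MONTHLY_LIMIT_SECONDS ?? "600"),
  };
}

// The custom range applies while `now` is inside it; otherwise the calendar month (UTC).
function currentWindow(now: Date, cfg: QuotaConfig): QuotaWindow {
  const t = now.getTime();
  if (cfg.startDate && cfg.endDate && t >= cfg.startDate.getTime() && t < cfg.endDate.getTime()) {
    return { start: cfg.startDate, end: cfg.endDate };
  }
  const year = now.getUTCFullYear();
  const month = now.getUTCMonth();
  // Date.UTC rolls month 12 over into january of the following year.
  return { start: new Date(Date.UTC(year, month, 1)), end: new Date(Date.UTC(year, month + 1, 1)) };
}

// Hypothetical check run on POST /api/runpod. Usage recorded in an earlier
// window (usageWindowStart differs) counts as 0, which acts as a lazy reset.
function checkQuota(
  monthlyUsage: number,
  usageWindowStart: Date | null,
  requestedSeconds: number,
  now: Date,
  cfg: QuotaConfig,
): QuotaDecision {
  const current = currentWindow(now, cfg);
  const sameWindow = usageWindowStart !== null && usageWindowStart.getTime() === current.start.getTime();
  const used = sameWindow ? monthlyUsage : 0;
  if (used + requestedSeconds > cfg.limitSeconds) {
    return { allowed: false, status: 403, message: "you have reached your monthly limit." };
  }
  return { allowed: true, newUsage: used + requestedSeconds, windowStart: current.start };
}
```

with the competition dates set, a request on jan 5 counts against the dec 16 to jan 16 window; from jan 16 on, the calendar month applies again and usage starts from 0.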

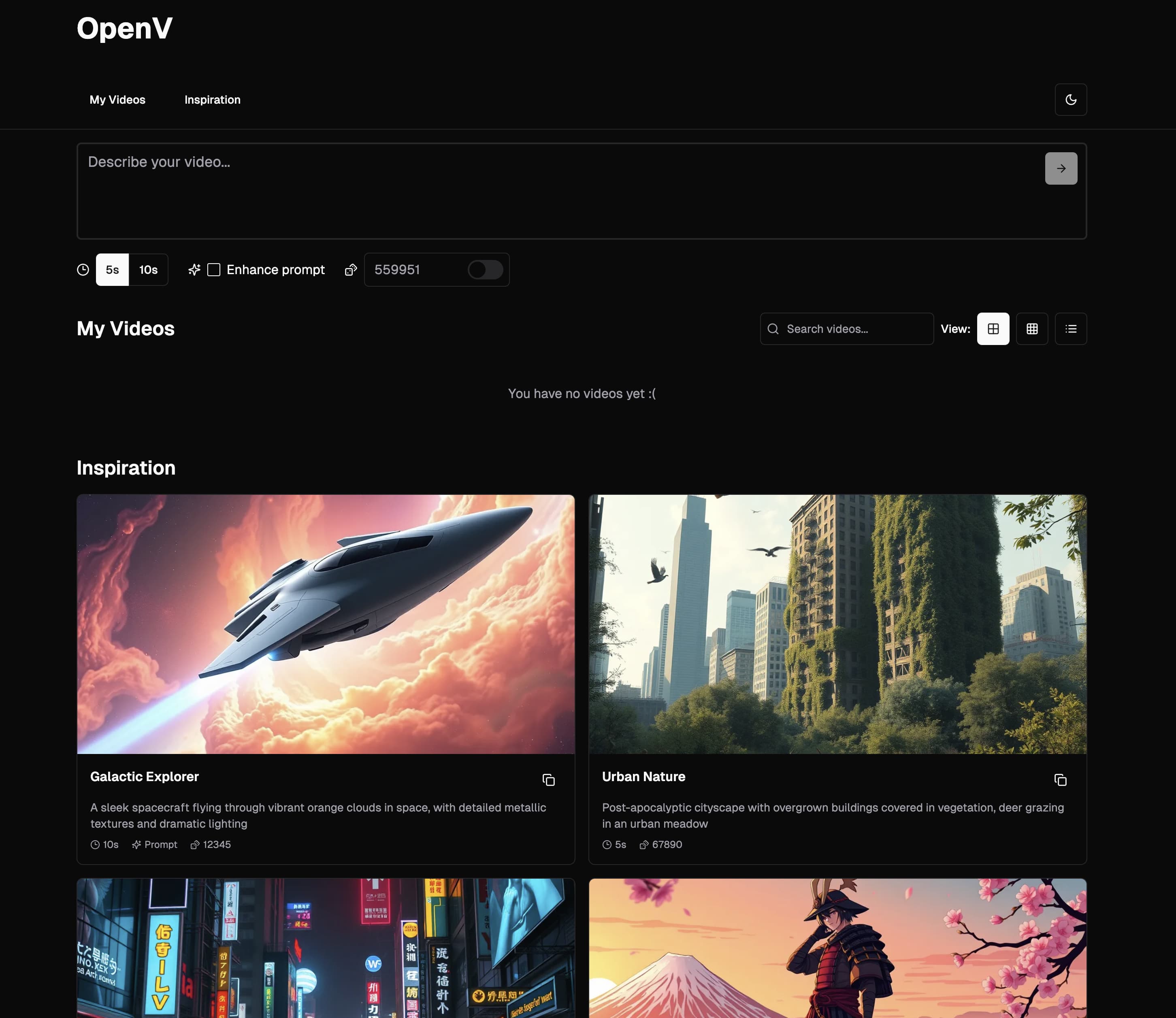

refining the frontend

after defining the foundation and the corresponding user stories, i went back into v0 to refine the prototype for OpenV. the goal was to make it more user-friendly and provide a place to control all the settings needed to generate videos with mochi.

78 prompts later:

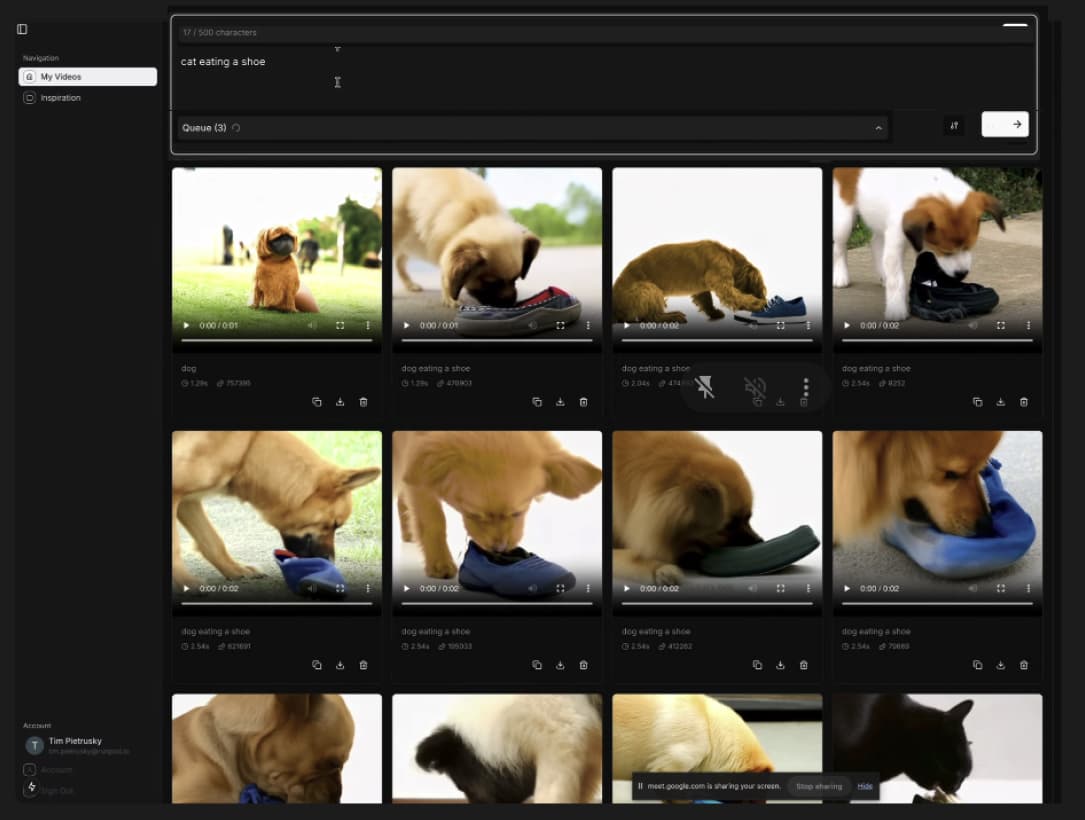

developing the whole thing

the next step was to get the generated frontend from v0 into the project with the help of Cursor (using the agent in composer).

then i added all the business logic based on the user stories:

- generate videos using the api deployed on Runpod

- basic job status checking (initial naive approach)

- webhook-based status updates with efficient polling

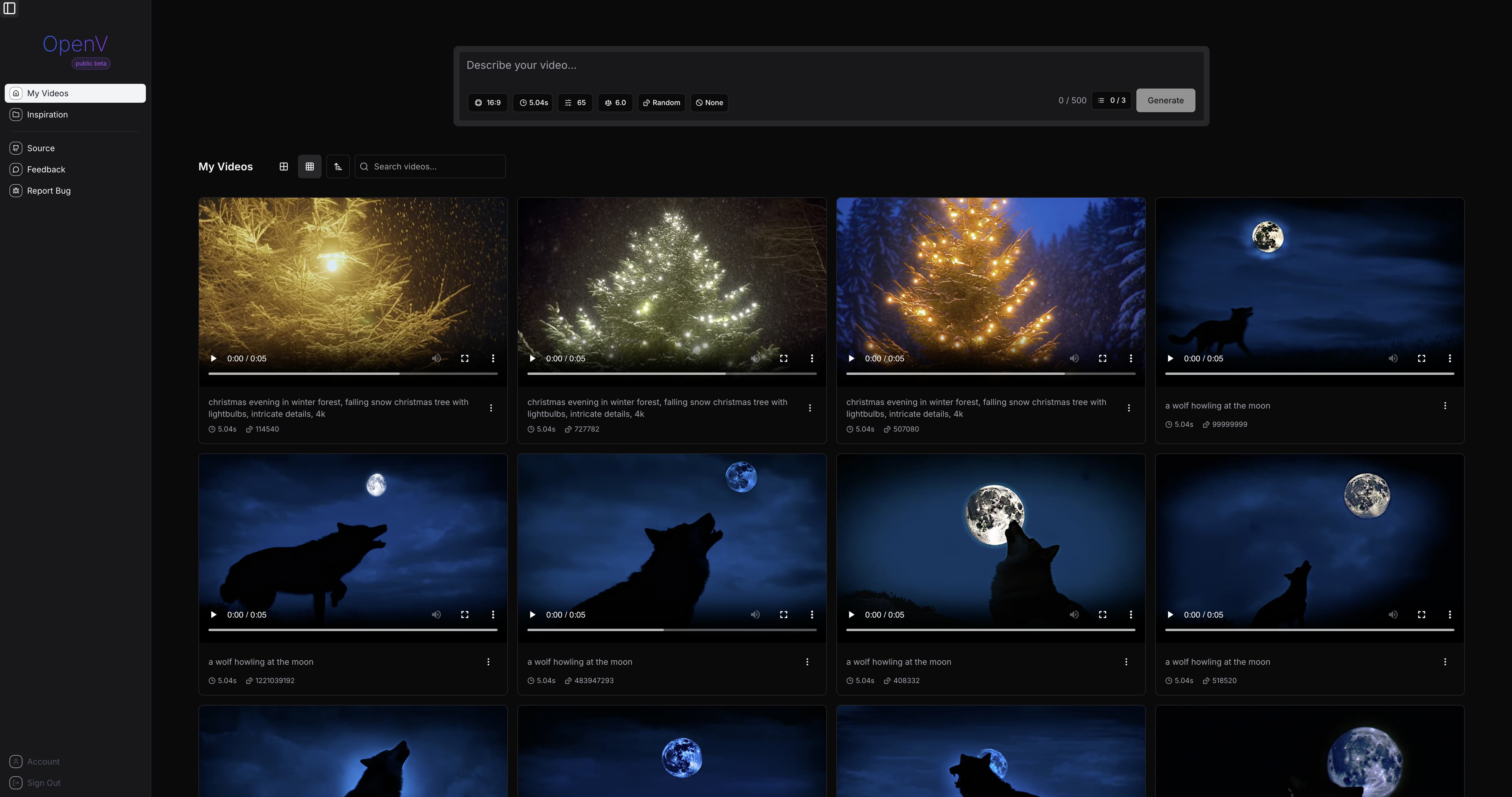

- “my videos” to show all generated videos

- concurrent job limits

- left sidebar to navigate between views

- “inspiration” view for prompt examples

- “terms of use” workflow with versioning that requires users to accept the terms before they can use “my videos”

- account management view

- voucher system for platform access, so only “Project Odyssey” attendees can use OpenV for free

- “monthly quota” to restrict usage to 10 minutes of generated video per user

- proper landing page (generated with v0 and then refined with Cursor)

- unit & integration tests
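one way to read “webhook-based status updates with efficient polling”: the webhook writes the job status into the database, and the browser only polls OpenV’s own api, backing off between requests instead of hammering Runpod. this is a sketch under that assumption; pollUntilDone, its options, and the /api/jobs/:id endpoint in the comment are hypothetical.

```typescript
// Statuses the web app stores for a job (assumed to mirror Runpod's names).
type JobStatus = "IN_QUEUE" | "IN_PROGRESS" | "COMPLETED" | "FAILED";

interface PollOptions {
  initialDelayMs?: number;
  maxDelayMs?: number;
  timeoutMs?: number;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Hypothetical poller: asks the app's own api (kept up to date by the webhook)
// until the job is finished, doubling the delay up to maxDelayMs between requests.
async function pollUntilDone(
  fetchStatus: () => Promise<JobStatus>,
  { initialDelayMs = 2000, maxDelayMs = 15000, timeoutMs = 10 * 60 * 1000 }: PollOptions = {},
): Promise<JobStatus> {
  const deadline = Date.now() + timeoutMs;
  let delay = initialDelayMs;
  while (Date.now() < deadline) {
    const status = await fetchStatus();
    if (status === "COMPLETED" || status === "FAILED") {
      return status;
    }
    await sleep(delay);
    delay = Math.min(delay * 2, maxDelayMs);
  }
  throw new Error("timed out waiting for the job to finish");
}

// Example wiring (the /api/jobs/:id endpoint is hypothetical):
// pollUntilDone(() => fetch(`/api/jobs/${jobId}`).then((r) => r.json()).then((j) => j.status));
```

because the webhook does the heavy lifting, each poll is a cheap database read, and the growing delay keeps idle tabs from flooding the api.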

improve with feedback

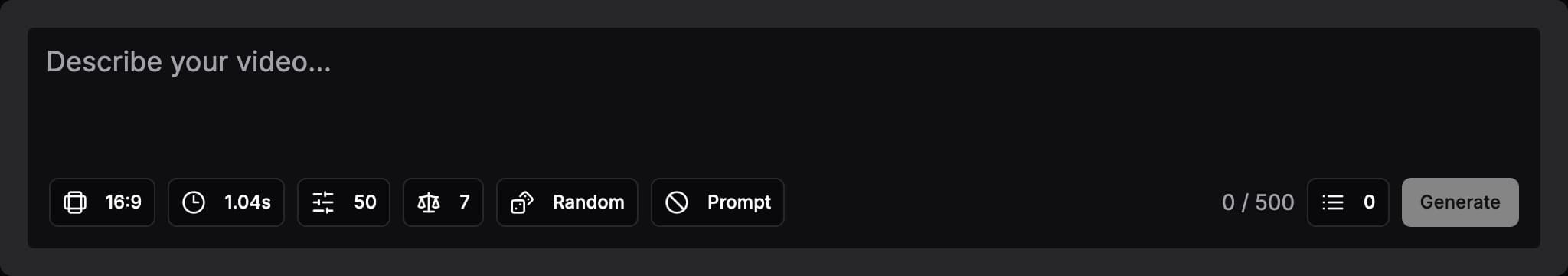

i spent 1h with frank myles (product designer at Runpod, thank you!) to refine the ux. we ended up with this ui mock:

the most important change was removing the settings panel on the right and integrating it into the prompt component instead. i took this back into v0 and generated a very concise version of the prompt component.

33 prompts later:

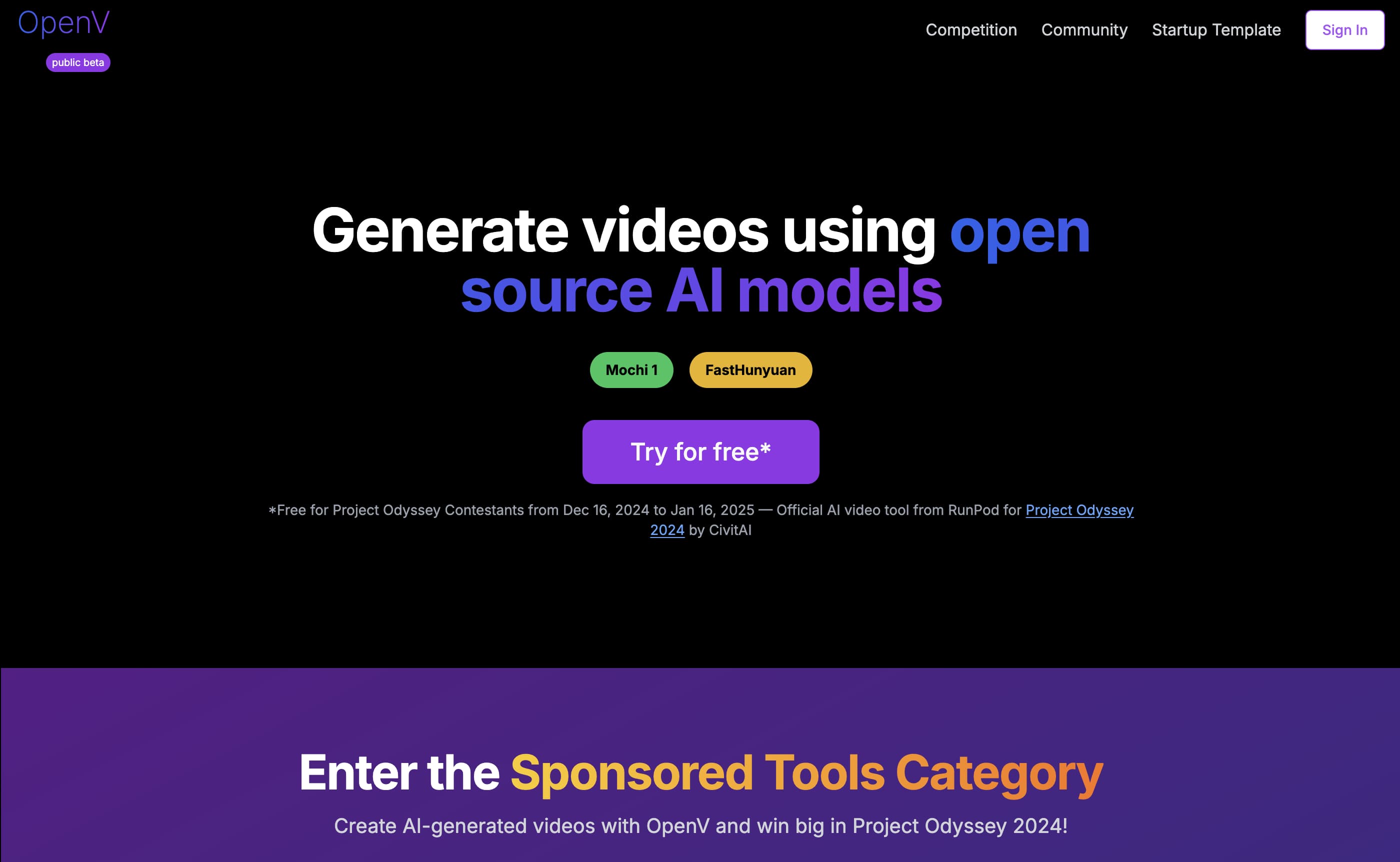

good enough landing page

i went back to v0 to generate a proper landing page and finally put into words what OpenV is all about.

37 prompts later:

then i integrated the new landing page into OpenV with Cursor:

i have used another ai to generate a new home page in @new-home-page.tsx. please use this as our new @page.tsx but use our components (logo, title). also make sure to replace our @footer.tsx with the footer from @new-home-page.tsx.

pm & legal

on top of this, i used ChatGPT o1 to generate all the tickets, and made sure that the “terms of use” provided by legal were in sync with OpenV.

manual work

the only manual part was setting up the various accounts (as it was my first time using Clerk, Supabase, UploadThing), getting budget approved, having meetings with people, talking via slack, and making sure everything is deployed.

competition starts

participants receive access to all the tools for Project Odyssey via email.

OpenV is not ready yet, so the landing page says: we are still cooking.

release day!

OpenV officially launched three days after the competition started. the delay was needed because OpenV was not feature-complete yet and i had to fix some “production only” bugs.

time tracking

let’s break down the time spent on OpenV:

- initial prototype (oct 26 - 27): ~2h

- model as api (nov 28 - dec 6): ~16h

- the web app (dec 9 - 18):

  - one full weekend = ~18h

  - ~8 working days with roughly 8h/day = ~64h

  - total for this phase: ~82h

which adds up to ~100h in total.

i spent a lot of time getting the tests working right, so if you want to be even quicker, you could skip them for the mvp and add them after launch. but for me personally, i feel more comfortable running tests than testing everything manually.

what’s shipping next?

- nsfw filter for the prompt

- ui performance improvements

- cookie notice to be gdpr compliant

- FastHunyuan model for video generation

- web analytics with PostHog

how to use an agent?

while working on this project, i learned a lot about how to work with an agent (running on Claude-3.5-sonnet inside Cursor):

- provide a lot of context (like .cursorrules & docs) so the agent goes in the same direction you want.

- do the review on the spot and try to understand the changes. don’t just approve everything if you want to build a high-quality product. if you don’t understand the changes, you might end up solving the same problem in a different way over and over.

- when the agent does something “wrong” from your perspective, try to understand why it did that and then update your context (like adding a new rule in .cursorrules) to prevent it from happening again.

- treat commits as checkpoints and don’t be afraid to revert to them if you want to go back.

build your own ai startup

all of this is open source, as we want to empower developers around the world to build on top of OpenV and create their own ai startup.

- web app: OpenV (MIT)

- model api: worker-mochi (MIT)

conclusion

we are living in amazing times, where one person can build and release a full-fledged ai startup in just ~100h by using ai.

what will this number be at the end of 2025?

thanks

i’m so grateful to be working at Runpod (thanks to everyone there), because i can create cool ai stuff with awesome people.

can someone pinch me, please?